The ground under enterprise learning is moving fast. AI learning tools have made knowledge findable, but haven't made capability automatic. Budgets are under pressure, hybrid teams are the norm, new managers are taking on larger spans of control, and regulators are paying closer attention to how organizations train on safety, privacy, and responsible AI.

In this environment, the best enterprise learning teams are shifting from “content factories” to “capability engines.” They are measured not by the number of courses shipped but by performance lift, time-to-productivity, and risk reduced.

Strategy that Survives Budget Reviews

A modern L&D strategy starts with the enterprise scoreboard. If your CEO and functional leaders don’t see a straight line from the learning plan to this year’s revenue, gross margin, NPS, risk posture, or operating efficiency, the plan won’t last long. Replace generic pillars with direct performance narratives that include the target audience, the critical tasks they perform, the observable gaps, and the operating metric that moves if the task improves.

To make this stick, take a capability-back approach. Map the few capabilities that drive competitive advantage (owning a discovery call, closing Tier-1 incidents, onboarding engineers to the deployment pipeline, managing via data rather than intuition) and decompose each one into skills, knowledge, tools, and decision patterns. Then make those components discoverable, coachable, and measurable in the flow of work, not just in a course catalog.

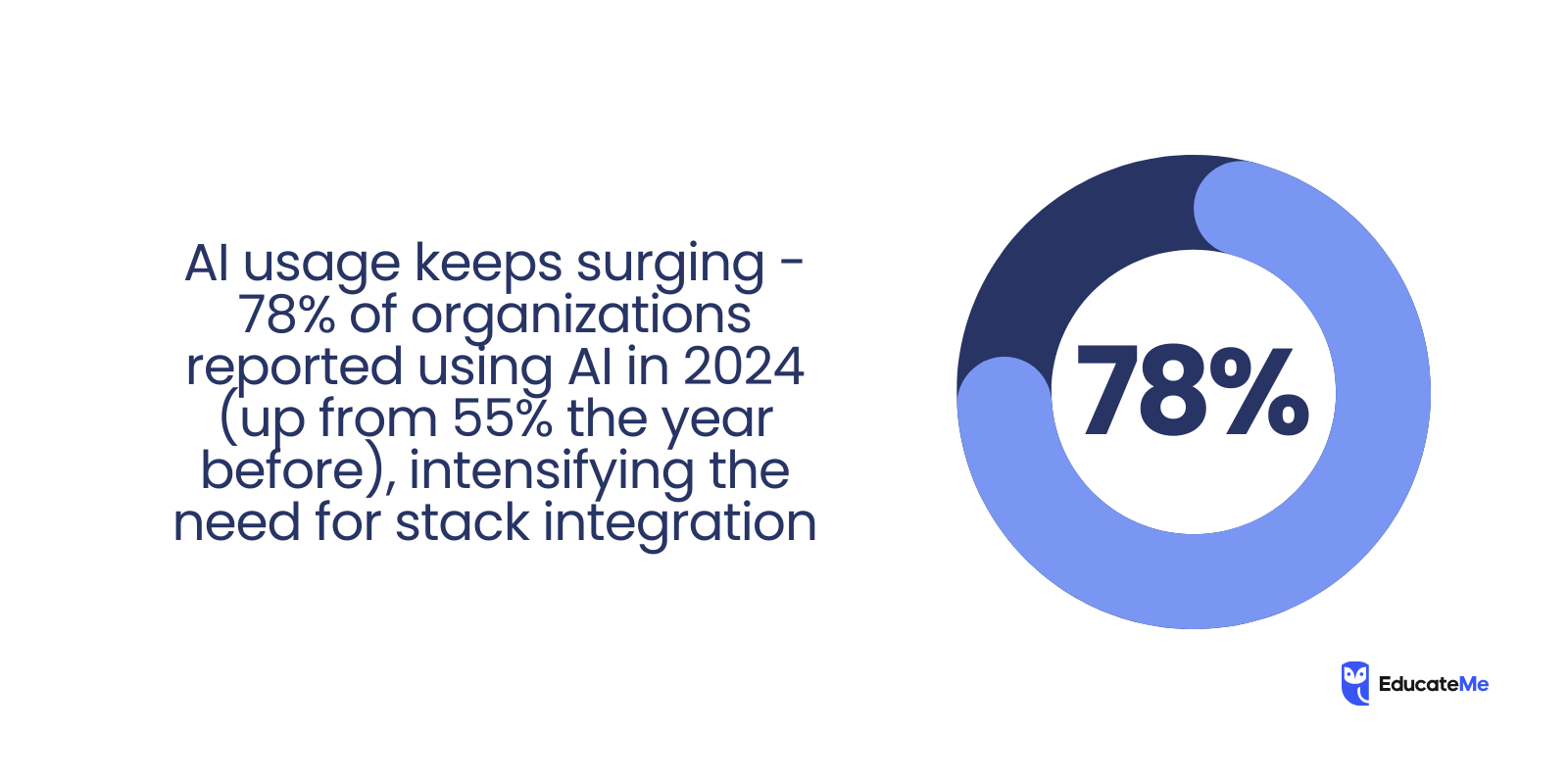

Stat to know: Only about 25% of companies have the full capabilities to scale AI (and by extension, capability-building) beyond pilots to tangible value, says the AI Adoption in 2024 Report.

The 2025 Operating Model for L&D

The organizations shipping results this year tend to converge on a simple but disciplined operating model. They act like product teams: they research, they prioritize, they ship minimum viable learning experiences, and they iterate with evidence. The calendar no longer dictates the roadmap; business outcomes do. L&D works in sprints with clear hypotheses, for example, “If frontline leaders use the new feedback model during shift handoffs for two weeks, first-time-fix will rise by two points and rework will drop by four.”

This model depends on lean governance. Instead of twelve sign-offs, you define a lightweight standards checklist for accessibility, brand, privacy, and data collection. Anything that passes the checklist can ship to a pilot audience quickly. Anything that fails gets reworked with guardrails, not delays. Your stakeholders will learn to trust L&D when they see a predictable cadence of small releases that actually move metrics.

What to Build Versus What to Buy

There’s never been more content available, and yet the “Netflix for learning” trap remains: consumption does not equal capability. The right move is to buy for foundational knowledge and compliance, but build for context and application. Industry-generic courses are fine for baseline literacy; your unique workflows, systems, and customer context need custom treatment.

A good rule of thumb is that off-the-shelf content should cover the “what and why” while your bespoke design targets the “how we do it here,” with scenarios, decision trees, and system walkthroughs that reflect your data, your screens, and your customers.

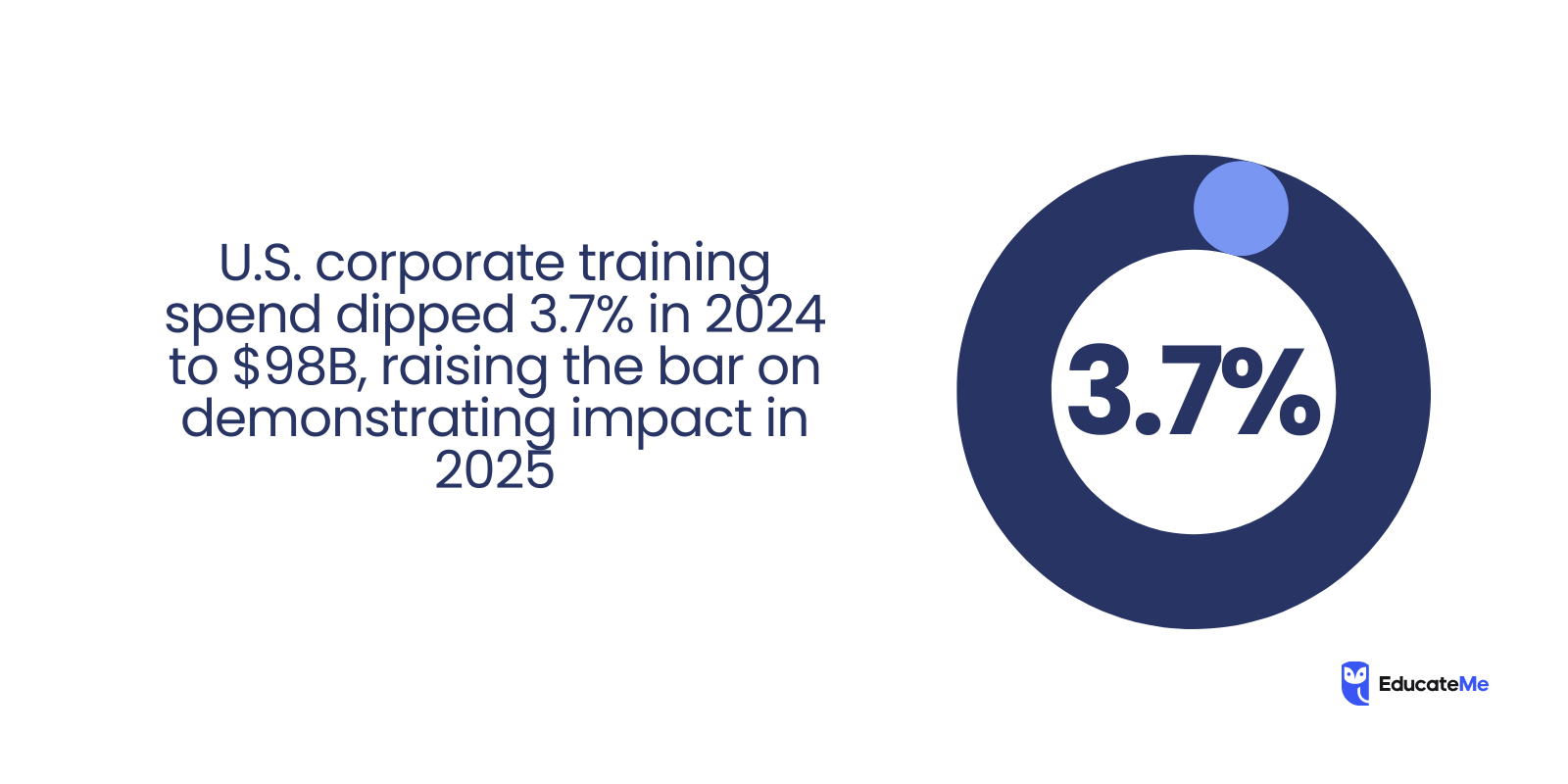

Stat to know: While overall U.S. training spend dipped in 2024, spend on outside products and services jumped 23%, a signal to buy judiciously where it makes sense.

The AI-Augmented Learning Stack

The 2025 learning stack is simpler than it looks when you group it by jobs-to-be-done:

- System of Work. Where performance happens – CRM, code repos, ticketing, POS, productivity suites. This is where we want guidance and nudges to appear.

- System of Learning Record. The Enterprise LMS layer that stores attempts, completions, xAPI events, practice artifacts, and reflections. It should unify data across experiences, not just track compliance.

- System of Creation and Orchestration. Tools that let you draft, version, personalize, and sequence experiences. AI belongs here as a co-pilot for authoring, translation, question generation, and scenario branching.

- System of Insight. Analytics that connect learning signals with business outcomes. Your BI stack, experimentation tooling, and HRIS data live here.
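The System of Learning Record above mentions xAPI events. As a minimal sketch of what one such event might look like, the snippet below builds a statement with the actor, verb, and object fields that xAPI statements use. The helper name `build_statement`, the email address, and the activity URL are hypothetical placeholders, not part of any specific platform.

```python
import json
from datetime import datetime, timezone


def build_statement(email: str, verb: str, activity_id: str, activity_name: str) -> dict:
    """Build a minimal xAPI-style statement (actor, verb, object, timestamp)."""
    return {
        "actor": {"objectType": "Agent", "mbox": f"mailto:{email}"},
        "verb": {
            # ADL publishes common verbs under this IRI prefix.
            "id": f"http://adlnet.gov/expapi/verbs/{verb}",
            "display": {"en-US": verb},
        },
        "object": {
            "objectType": "Activity",
            "id": activity_id,  # hypothetical activity IRI
            "definition": {"name": {"en-US": activity_name}},
        },
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


statement = build_statement(
    "learner@example.com",
    "completed",
    "https://example.com/activities/feedback-model-practice",
    "Feedback model practice",
)
print(json.dumps(statement, indent=2))
```

Emitting the same shape from every experience is what lets the record layer unify practice and application data rather than only tracking completions.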

When you evaluate platforms, ask how easily they embed into the system of work, how cleanly they emit structured data, and how well the orchestration layer uses AI to personalize without turning every path into an unmanageable snowflake. Personalization should be rule-based and explainable, for auditability and for learners’ trust.
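To illustrate what rule-based, explainable personalization can mean in practice, here is a small sketch in which every recommendation carries the name of the rule that produced it. The roles, score thresholds, and module names are all hypothetical; a real orchestration layer would define its own.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    name: str       # human-readable reason, shown to learners and auditors
    role: str       # role the rule applies to
    max_score: int  # rule applies when the diagnostic score is at or below this
    module: str     # module to recommend


# Hypothetical rules, evaluated in order; the first match wins.
RULES = [
    Rule("SDR needs discovery basics", "sdr", 60, "discovery-call-foundations"),
    Rule("SDR ready for advanced practice", "sdr", 100, "discovery-call-clinic"),
    Rule("Engineer new to pipeline", "engineer", 100, "deployment-pipeline-walkthrough"),
]


def recommend(role: str, score: int) -> tuple[str, str]:
    """Return (module, reason) so every recommendation can be explained."""
    for rule in RULES:
        if rule.role == role and score <= rule.max_score:
            return rule.module, rule.name
    return "core-onboarding", "No specific rule matched; default path"


print(recommend("sdr", 45))
```

Because the rule list is short and ordered, the number of distinct paths stays bounded, which keeps both auditing and measurement manageable.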

Responsible AI in Learning

AI now drafts micro-lessons, builds knowledge checks, summarizes playbooks, and acts as a conversational tutor. That power demands rigor. Establish an AI “model card” for your learning team: the data sources the assistants may use, the red lines (no PII, no protected attributes, no copyrighted materials without license), the feedback loops for hallucination reports, and the human-in-the-loop checkpoints for high-stakes content. Document your prompt patterns and test cases. Treat generative content like code: versioned, reviewed, and rolled back if needed.

Stat to know: Violating the EU AI Act can trigger fines up to €35 million or 7% of global annual turnover for certain infringements; governance isn’t optional.

Most importantly, use AI where it’s natively strong: transforming existing, approved knowledge into different modalities; turning procedural documentation into step-by-step checklists; simulating customer dialogues; translating and localizing; and creating data-driven practice. Resist the temptation to have AI invent policy or make claims you can’t verify.

Stat to know: While 75% of organizations report having AI usage policies, fewer than 60% have dedicated governance roles or incident response playbooks. Mind the policy-to-practice gap.

Design for Doing

Instructional design has always prized alignment, practice, and feedback. In 2025, the constraint is attention. The best teams are ruthless about designing for action and memory in tiny windows. Replace the “course first” mindset with a continuum of interventions: pre-work that makes tacit knowledge visible, job aids at the point of need, deliberate practice with feedback, and community reinforcement that keeps behaviors alive after the program ends.

Short doesn’t mean shallow. A five-minute experience can carry a surprising amount of weight if it teaches one decision pattern in context and asks the learner to apply it immediately. Build experiences around the moments that matter: the crucial email, the first 90 seconds of a call, the branch in the troubleshooting path. Anchor everything to those moments, and the learning will feel necessary rather than optional.

Blended Modalities that Actually Blend

Hybrid work has made asynchronous learning essential, but live sessions still have a unique power: psychological safety, peer connection, and rapid sense-making. Blend the two intentionally.

Put knowledge transfer and baseline practice in self-paced modules, then use live time for higher-order activities: coaching on recorded artifacts, feedback on real cases, calibration of standards, and commitment planning. When you do meet live, run short, focused clinics rather than long webinars, and require artifacts that prove application in the flow of work.

Stat to know: A U.S. Department of Education meta-analysis found blended learning outperforms purely face-to-face instruction on average.

Building a Coaching Culture Without Heroics

Most organizations try to “scale” coaching by asking managers to do more of it without changing their environment. A better move is to make feedback easy and safe. Provide lightweight rubrics tied to the capability model. Ask for small, specific observations rather than generic ratings. Let peers offer feedback on artifacts (code reviews, call snippets, incident timelines), using structured prompts that focus on decisions and trade-offs.

Finally, give managers a simple weekly cadence: one micro-conversation on goals, one on progress, one on a recent artifact. Leaders are more likely to stick with coaching when it’s clear, brief, and routine.

Measurement that Executives Believe

Executives distrust vanity metrics because they’ve seen them abused. Make your chain of evidence tight and modest. Start with activity to show reach, but move quickly to behavioral leading indicators (adoption of the new checklist, usage of the talk track, time to first successful runbook execution) and then to business outcomes (deal velocity, CSAT, mean time to resolution, scrap rate, safety incidents, audit findings).

Use control groups or phased rollouts where practical, and pre-register your success criteria so you aren’t tempted to cherry-pick. If the business runs on dashboards, show up in those dashboards. If it runs on quarterly business reviews, embed a learning slide that mirrors their format.
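A minimal sketch of pre-registering a success criterion and then checking it against a pilot and a control group is shown below. The metric name, the threshold, and the sample values are hypothetical, and the comparison is a plain difference in means rather than a statistical significance test.

```python
from statistics import mean

# Written down before the pilot starts (hypothetical metric and threshold).
CRITERION = {"metric": "mean_time_to_resolution_hours", "min_improvement": 0.5}


def evaluate(pilot: list[float], control: list[float], min_improvement: float) -> dict:
    """Compare group means; lower is better for a time-to-resolution metric."""
    improvement = mean(control) - mean(pilot)
    return {
        "pilot_mean": mean(pilot),
        "control_mean": mean(control),
        "improvement": improvement,
        "criterion_met": improvement >= min_improvement,
    }


# Hypothetical observations, in hours, for each group.
pilot = [3.5, 4.0, 3.0, 3.5]
control = [4.5, 4.0, 5.0, 4.5]
result = evaluate(pilot, control, CRITERION["min_improvement"])
print(result)
```

Fixing the threshold before the data arrives is the point: the result either clears the bar or it doesn't, and there is no room to pick a more flattering metric afterwards. With small groups, treat the difference as directional evidence rather than proof.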

Onboarding that Halves Time-to-Productivity

Employee onboarding often collapses into a firehose of content, which delays contribution rather than accelerating it. Flip the structure. Define “day-10 wins,” “day-30 wins,” and “day-60 wins” that are observable in the real tools. For a sales development rep, that might mean loading a clean target list, running a compliant outreach sequence, and logging conversations with correct dispositions. For a platform engineer, it could be shipping a trivial change through the pipeline, rotating on call with a shadow, and solving a real support ticket under supervision.

Stat to know: Strong onboarding practices are associated with 82% higher retention and productivity gains of more than 70%.

Design backward from those wins. Provide a short primer, a guided walk-through in the actual tools, a safe sandbox, and a simple checklist. Assign a buddy and a coach, each with distinct responsibilities. Strip out everything that is nice to know but not needed for the next win. The proof of success is not survey happiness; it’s the moment the new hire performs independently without creating rework.

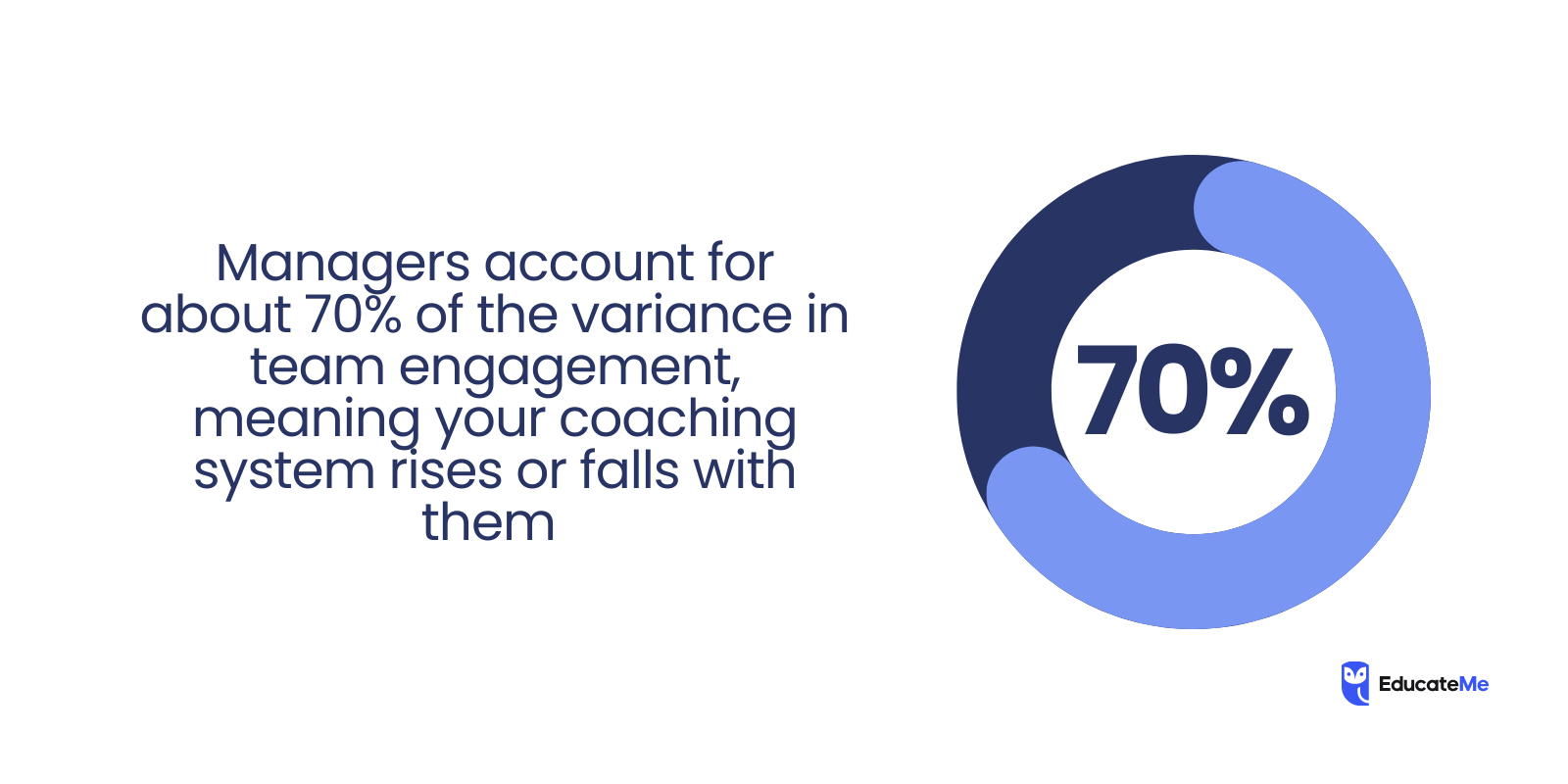

Building Managers Who Can Carry Culture

Middle managers are the multiplier, or the bottleneck. If you invest anywhere, invest here. Give them a clear model of leadership behaviors that match your strategy, a cadence for people operations that doesn’t depend on heroism, and tools for one-to-ones, feedback, and performance narrative writing.

Role-play the tricky conversations with AI, and let managers practice on anonymized real cases rather than hypotheticals. Most of all, align incentives: recognize and reward managers who build teams that perform sustainably, not just those who hit short-term numbers.

Compliance Without the Eye-Roll

Regulated businesses have to document training, but they don’t have to bore people. There are two keys. First, contextualize the rule with the risk it controls and the incident it prevents. Second, make the practice realistic: show the system screen where the decision happens; present the email that looks almost legitimate; make the checklist short enough that people will actually use it.

If your compliance training doesn’t change a single behavior or artifact in the system of work, it’s theater. Tie it to fewer incidents, faster audits, and cleaner handoffs, and leaders will defend the time. For regulatory training, an LMS with built-in compliance features is usually the most practical foundation.

Global Scale, Local Nuance

Enterprises operate across languages, markets, and cultures. Translation is table stakes. Localization is the differentiator. Adjust examples, customers, currencies, and legal references. Respect regional leadership styles and decision rights.

Empower local enablement partners with templates, brand kits, and content blocks they can adapt without rebuilding from scratch. Create a “contribution back” loop so strong local adaptations become global upgrades rather than permanent forks.

Stat to know: Translation & localization within e-learning is a $36.9B segment (2024), projected to reach nearly $97.4B by 2030, which is evidence that localized learning is now a core capability.

Content Lifecycle Management, not Content Sprawl

Learning repositories tend to bloat. Treat content like inventory. Each item should have an owner, a purpose, a target audience, and an expiry or review date. Track usage and outcomes; archive or retire what doesn’t earn its keep.

Favor modular content blocks that can be recombined rather than monoliths that go stale. Maintain a single source of truth for each policy or playbook and let derivative experiences reference it rather than duplicating it. Your future self (and your audit team) will thank you.
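The inventory idea can be sketched as a small record per content item plus a triage pass. The fields mirror the ones named above (owner, audience, review date, usage); the usage threshold, the titles, and the function name `triage` are hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class ContentItem:
    title: str
    owner: str
    audience: str
    review_by: date
    uses_last_quarter: int


def triage(items: list[ContentItem], today: date, min_uses: int = 10) -> dict[str, list[str]]:
    """Sort items into keep / review / retire buckets."""
    buckets: dict[str, list[str]] = {"keep": [], "review": [], "retire": []}
    for item in items:
        if item.uses_last_quarter < min_uses:
            buckets["retire"].append(item.title)   # not earning its keep
        elif item.review_by <= today:
            buckets["review"].append(item.title)   # used, but past its review date
        else:
            buckets["keep"].append(item.title)
    return buckets


today = date(2025, 6, 1)
items = [
    ContentItem("Incident triage runbook", "ops-enablement", "Tier-1 support", date(2025, 9, 1), 120),
    ContentItem("Legacy CRM walkthrough", "sales-enablement", "SDRs", date(2025, 3, 1), 2),
    ContentItem("Feedback model primer", "leadership-dev", "Frontline leaders", date(2025, 5, 1), 45),
]
print(triage(items, today))
```

Running a pass like this on a schedule turns "archive or retire" from a good intention into a routine, with a named owner to ask about each flagged item.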

The Data that Matters, and How to Get it

Saying “data-driven L&D” isn’t enough; you need a small, reliable set of signals. Start with exposure (who touched the experience), engagement (who completed or attempted practice), application (who used the behavior in the wild), and impact (what business metric moved). Application data is the hardest to collect and the most valuable. Get it by instrumenting the system of work: tags in CRM notes, fields in ticketing forms, flags in code reviews, or checkboxes on runbooks. You can then compare outcomes for cohorts who applied the behavior against those who didn’t.
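As a rough sketch of how the four signals fit together, the snippet below computes exposure, engagement, and application rates from per-learner records and then compares a business metric between learners who applied the behavior and those who did not. The records, the `csat` metric, and the function names are hypothetical.

```python
from statistics import mean

# Hypothetical records: one per person in the target audience.
records = [
    {"id": "a", "exposed": True,  "engaged": True,  "applied": True,  "csat": 4.6},
    {"id": "b", "exposed": True,  "engaged": True,  "applied": False, "csat": 4.0},
    {"id": "c", "exposed": True,  "engaged": False, "applied": False, "csat": 3.8},
    {"id": "d", "exposed": False, "engaged": False, "applied": False, "csat": 4.0},
    {"id": "e", "exposed": True,  "engaged": True,  "applied": True,  "csat": 4.4},
]


def funnel(rows: list[dict]) -> dict[str, float]:
    """Share of the audience reaching each stage."""
    total = len(rows)
    return {stage: sum(r[stage] for r in rows) / total
            for stage in ("exposed", "engaged", "applied")}


def impact_gap(rows: list[dict], metric: str) -> float:
    """Mean metric for those who applied minus mean for those who did not."""
    applied = [r[metric] for r in rows if r["applied"]]
    not_applied = [r[metric] for r in rows if not r["applied"]]
    return mean(applied) - mean(not_applied)


print(funnel(records))
print(round(impact_gap(records, "csat"), 2))
```

A gap like this is a correlation, not proof of cause: people who choose to apply a behavior may differ in other ways, which is why a control group or phased rollout strengthens the claim.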

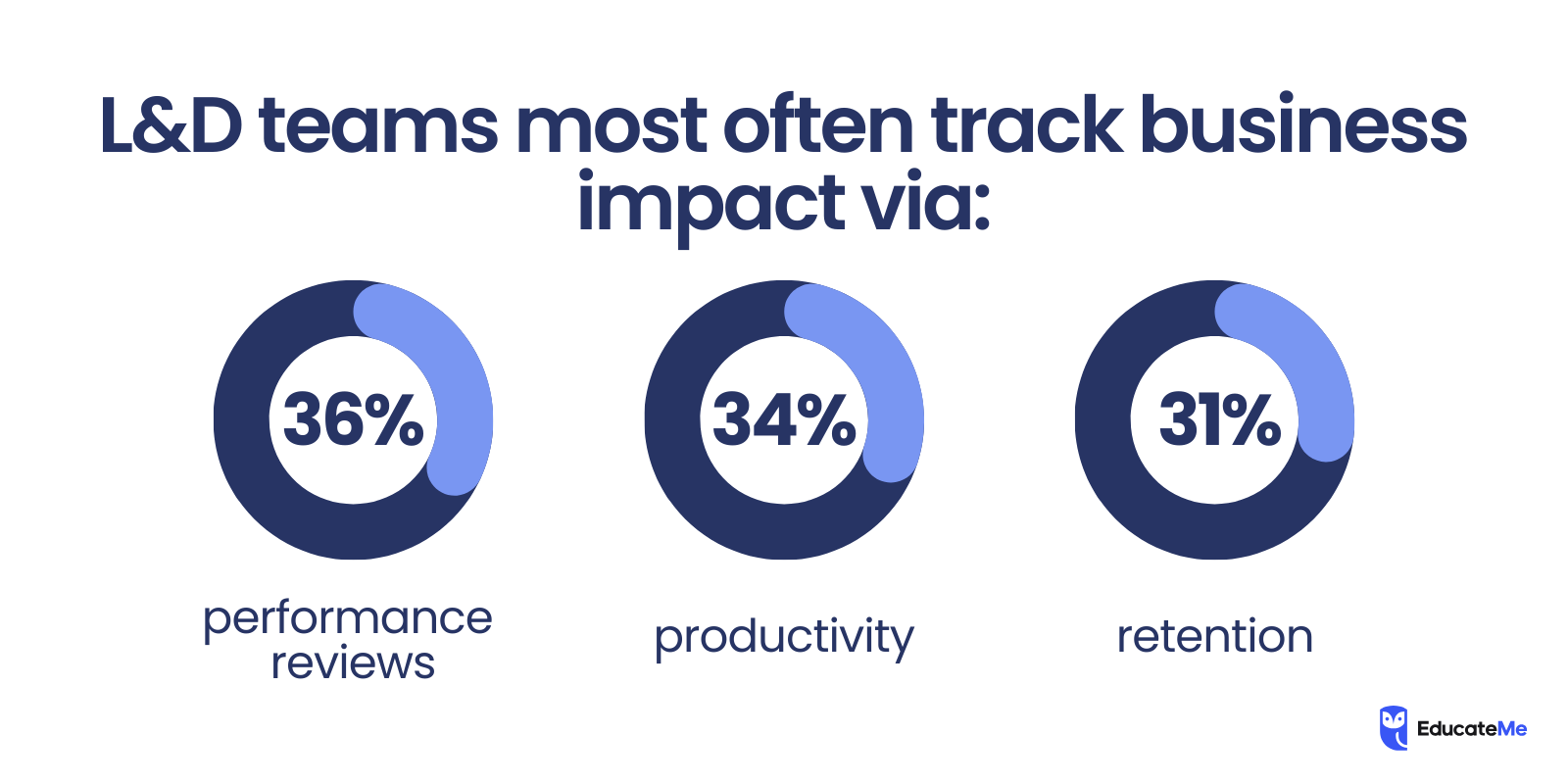

Stat to know: Many L&D teams still struggle to measure impact effectively; only 56% say they can do it, per a 2024 industry analysis.

Don’t forget qualitative data. Short reflective prompts captured at the moment of use (“What made this call hard?” “Which step of the runbook surprised you?”) surface obstacles that numbers miss. Feed those insights back into the next iteration.

Budgeting for Outcomes

In tight cycles, every investment competes with a revenue engine. Anchor your budget to cost avoided and value produced. Show how a manager enablement program prevents regrettable attrition that would cost twice the salary to replace, or how better first-line triage saves thousands of hours per quarter. Reuse platform capabilities wherever possible. Train a small internal guild to produce high-quality experiences with shared templates. And hold external vendors to the same standard you hold yourself: clear hypotheses, pilot metrics, and staged payments tied to adoption and outcome milestones.

Stat to know: The cost to replace an employee commonly ranges from 50% to 200% of annual salary, underscoring the ROI of capability and retention programs.
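The cost-avoided argument is simple arithmetic, sketched here using the 50% to 200% replacement-cost range. The salary, the number of exits prevented, and the program cost are hypothetical inputs chosen only to show the calculation.

```python
def replacement_cost_range(salary: float, low: float = 0.5, high: float = 2.0) -> tuple[float, float]:
    """Cost to replace one employee, using the 50%-200% of salary range."""
    return salary * low, salary * high


def avoided_cost(salary: float, exits_prevented: int, program_cost: float) -> tuple[float, float]:
    """Net value (low, high) after subtracting what the program cost."""
    low, high = replacement_cost_range(salary)
    return exits_prevented * low - program_cost, exits_prevented * high - program_cost


# Hypothetical inputs: $90k average salary, 5 regrettable exits prevented, $150k program.
print(avoided_cost(90_000, 5, 150_000))  # (75000.0, 750000.0)
```

Presenting the result as a range rather than a single number keeps the claim modest, and showing that even the low end is positive makes a stronger budget case than an optimistic point estimate.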

A Realistic Adoption Plan

Even great learning fails if people don’t show up. Work with internal comms to name the program, brand it, and tell a specific story about what problem it solves. Recruit early champions who help shape the pilot and then vouch for it.

Make enrollment the default where appropriate, and ensure reminders come from the learner’s manager, not just from a system. Celebrate small wins publicly: snippets of real artifacts, quick interviews with learners about what changed, dashboards that show progress toward the capability goal. Momentum beats mandate.

Accessibility and Inclusive Learning Design

Inclusive design is not only ethical and often legally required, but it also improves outcomes for everyone. Use plain language. Offer transcripts and captions. Ensure keyboard navigation and sufficient contrast. Provide alternatives for time-boxed activities and for learners in low-bandwidth environments.

Audit imagery and examples for stereotype risk. Invite employee resource groups to review high-visibility programs, and compensate them for the labor of that expertise. Accessibility is a bar you clear every time, not a feature you sometimes add.

Stat to know: Roughly 1.3 billion people, about 16% of the world’s population, live with significant disability; accessibility is a matter of scale, not an edge case.

Security and Privacy

Learning platforms and AI tools handle sensitive information. Partner early with security and privacy. Classify the types of data your team will process; restrict PII and sensitive business data; document data flows; and prefer vendors with clear, audited controls. For internally developed AI assistants, define retention, logging, and redaction. The upside is not just reduced risk – it’s faster approvals when stakeholders see L&D treat security as a design input rather than an obstacle.

Common Pitfalls to Avoid

The most frequent failure is confusing production with progress. A beautiful course library with low application is a liability. Another trap is over-personalization that fragments the experience and defeats measurement. Be skeptical of dashboards that stop at completions, of AI that promises outcomes without instrumentation, and of pilots that never graduate to standard operating procedures.

Stat to know: Despite enthusiasm for AI, only 11% of CIOs report it’s fully implemented in their organization. Beware shiny-object pilots that never operationalize.

Cultural pitfalls matter too. If leaders outsource capability entirely to L&D, the programs will feel optional. If L&D tries to own behavior change without line leadership, adoption will sag. You need a handshake: L&D designs the environment, leaders own the standard and the cadence that makes the behavior normal.

What “Good” Looks Like by This Time Next Year

If you execute the roadmap, by the end of 2025, your learning function should feel quieter and more effective. The catalog will be smaller but more targeted. Programs will ship as a rhythm, not as large, brittle launches. Managers will have a predictable coaching cadence, and learners will find guidance where they work rather than hunting for it. Most tellingly, your quarterly reviews will feature capability metrics side-by-side with business KPIs, and no one will ask “What does L&D do again?” because the answer will be visible in how the work gets done.

Stat to know: Organizations that blend modalities and instrument for outcomes are aligning with the 2024 trendline, where productivity and retention are the top-tracked impact measures (34% and 31%, respectively).

Build the Engine, Not Just the Course

The last mile of enterprise learning is still the same: turning knowing into doing under pressure. What’s different in 2025 is that we finally have the parts to close the gap: instrumentation to capture application, AI to scale practice and feedback, and product-style ways of working that make improvement habitual. The real constraint is focus. Pick the few capabilities that matter most, design for the decisive moments, embed guidance where work happens, and hold yourself to the same evidentiary standards the rest of the business uses.

If that’s the engine, your LMS is the chassis it rides on. The right platform should embed in the flow of work, support scalable practice and coaching, emit clean data, and play nicely with your BI and HRIS. To help you translate this blueprint into tooling choices, head to our next piece: 18 Best Enterprise LMS Platforms for 2025, a practical rundown of options that can actually power the strategy you just read.